Suranga Nanayakkara

Associate Professor

Suranga Nanayakkara is an Associate Professor at the Department of Information Systems & Analytics, School of Computing, National University of Singapore (NUS). Before joining NUS, Suranga was an Associate Professor at the Auckland Bioengineering Institute, University of Auckland. Prior to that, he was an Assistant Professor at the Singapore University of Technology and Design (SUTD) and a Postdoctoral Associate at the MIT Media Lab.

He received his PhD in 2010 and BEng in 2005 from the National University of Singapore. In 2011, he founded the "Augmented Human Lab" to explore ways of designing intelligent human-computer interfaces that extend the limits of our perceptual and cognitive capabilities.

Suranga is among that rare breed of engineers who have a sense of “humanity” in technology. This sense spans practical behavioral issues, an understanding of the real-life contexts in which technologies function, and an understanding of where technologies can be not just exciting or novel but can have a meaningful impact on the way people live. The connection he feels to the communities in which he lives and with which he works is a quality that will ensure his research always has real relevance.

His work matters most to the people whose lives it most directly affects: those who face challenges functioning in the world due to sensory deficits in hearing or vision. What also makes Suranga’s contributions important is that they are not limited to those specific communities. Because of his emphasis on “enabling” rather than “fixing,” the technologies Suranga has developed have a potentially much broader range of applications.

Suranga is a Senior Member of the ACM and has served in a number of roles, including General Chair of the Augmented Human Conference in 2015 and member of many review and program committees, including SIGCHI, TEI and UIST. With publications in prestigious conferences, demonstrations, patents, media coverage and real-world deployments, Suranga has demonstrated the potential of advancing the state of the art in Assistive Human-Computer Interfaces. In recognition of the breadth of his achievements, he has won many awards, including MIT Technology Review's Innovators Under 35 (TR35) award for the Asia Pacific region, Outstanding Young Persons of Sri Lanka (TOYP), and the INK Fellowship 2016.

Projects

Toro

Stakco

SoniPhy

SonicVista

SeEar

Kavy

iTILES

EMO-KNOW

DroneBuddy

AiSee

Hopu

Crowd-Eval-Audio

Audio-Morph GAN

XRtic

VRHook

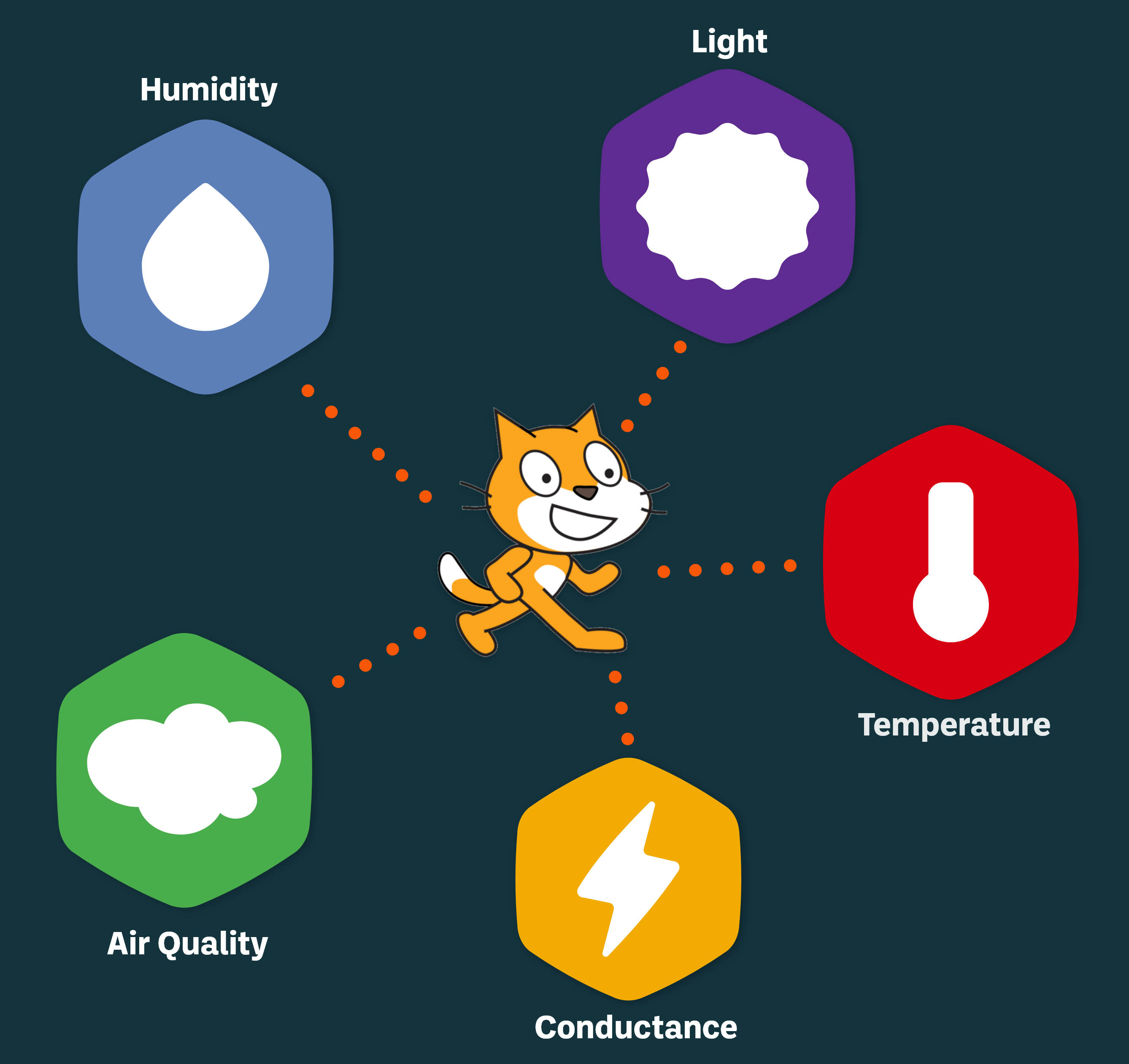

Scratch and Sense

Kiwrious

Affect in the Wild

TickleFoot

StressShoe

OM

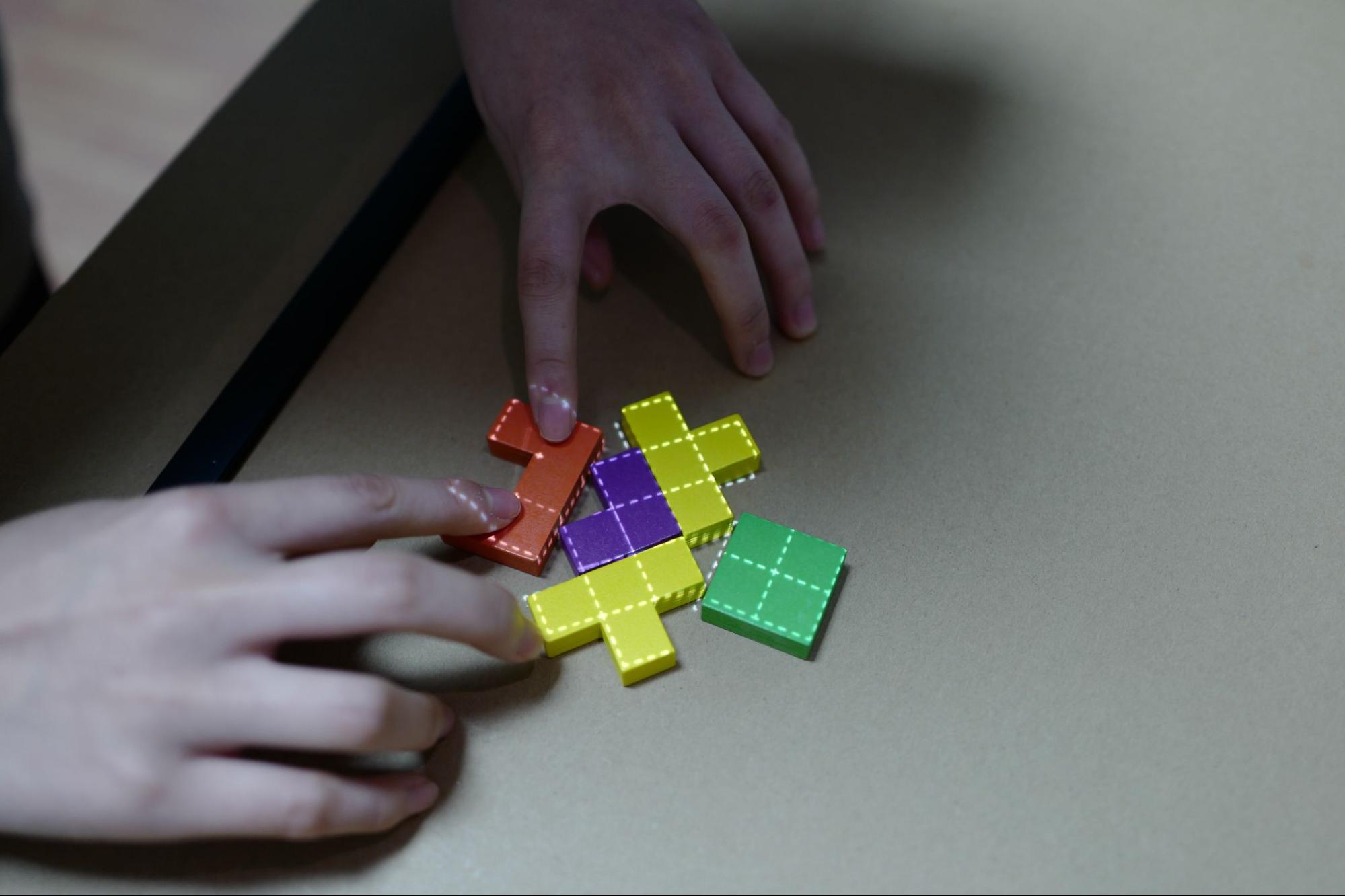

MagicBLOCKS

LightSense

KinVoice

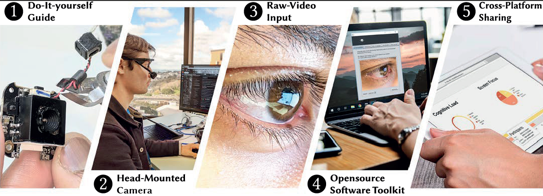

EyeKnowYou

ClothTiles

ANISMA

VersaTouch

StressFoot

RainbowHub

Prompto

PhantomTouch

Jammify

ActualTouch

Prospero

M-Hair

GymSoles

ChewIt

Target Driven Navigation

Step Detection Algorithm

ProspecFit

oSense

LightTank

fSense

Evaluating IVR in schools

CricketCoach

CompRate

CapMat

ABI FoodCam

2bit-Tactile Hand

Thumb in Motion

MuSS-Bits

Kyanite

InSight

GestAKey

GesCAD

FingerReader

Doodle Daydream

ChaddyBuddy

zSense

WaveSense

SwimSight

StickAmps

PostBits

Knoctify

ArmSleeve

The RIBbon

SonicSG

SHRUG

RippleTouch

FingerReader-v0

Feel the Globe

BWard

SparKubes

PaperPixels

nZwarm

Keepers & Bees

iSwarm

ircX

I-Draw

Foot.Note

EarPut

StickEar

SpiderVision

SmartFinger

FingerDraw

Birdie

Augmented Forearm

WatchMe

SpeechPlay

Sparsh

EyeRing

SUTDFoodCam

SoundFloor

Sensei

HapticChair

Publications

VR.net: A Real-world Dataset for Virtual Reality Motion Sickness Research

Wen, E., Gupta, C., Sasikumar, P., Billinghurst, M., Wilmott, J., Skow, E., Dey, A., Nanayakkara, S.C. VR.net: A Real-world Dataset for Virtual Reality Motion Sickness Research. The 31st IEEE Conference on Virtual Reality and 3D User Interfaces, 2024. Best Paper Award!

Sound Designer-Generative AI Interactions: Towards Designing Creative Support Tools for Professional Sound Designers

Kamath, P., Morreale, F., Bagaskara, P.L., Wei, Y., and Nanayakkara, S.C. 2024. Sound Designer-Generative AI Interactions: Towards Designing Creative Support Tools for Professional Sound Designers. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’24), May 11–16, 2024, Honolulu, HI, USA.

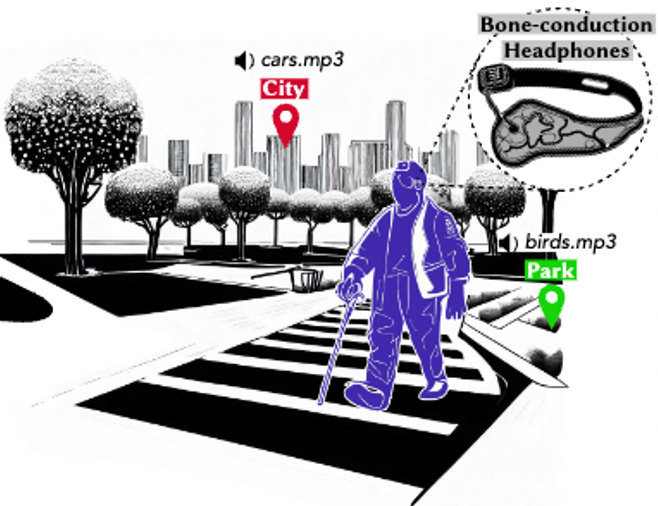

SonicVista: Towards Creating Awareness of Distant Scenes through Sonification

Gupta, C., Sridhar, S., Matthies, D.J., Jouffrais, C. and Nanayakkara, S.C., 2024. SonicVista: Towards Creating Awareness of Distant Scenes through Sonification. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 8(2), pp.1-32.

SeEar: Tailoring Real-time AR Caption Interfaces for Deaf and Hard-of-Hearing (DHH) Students in Specialized Educational Settings

Samaradivakara, Y., Ushan, T., Pathirage, A., Sasikumar, P., Karunanayaka, K., Keppitiyagama, C., Nanayakkara, S.C., 2024. SeEar: Tailoring Real-time AR Caption Interfaces for Deaf and Hard-of-Hearing (DHH) Students in Specialized Educational Settings. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI EA ’24), May 11–16, 2024, Honolulu, HI, USA.

Exploring an Extended Reality Floatation Tank Experience to Reduce the Fear of Being in Water

Montoya, M.F., Qiao, H., Sasikumar, P., Elvitigala, D.S., Pell, S.J., Nanayakkara, S.C., Mueller, F. ‘Floyd’, 2024. Exploring an Extended Reality Floatation Tank Experience to Reduce the Fear of Being in Water. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’24), May 11–16, 2024, Honolulu, HI, USA.

Example-Based Framework for Perceptually Guided Audio Texture Generation

Kamath, P., Gupta, C., Wyse, L., and Nanayakkara, S.C., “Example-Based Framework for Perceptually Guided Audio Texture Generation,” in IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 32, pp. 2555-2565, 2024.

CoTacs: A Haptic Toolkit to Explore Effective On-Body Haptic Feedback by Ideating, Designing, Evaluating and Refining Haptic Designs Using Group Collaboration

Messerschmidt, M. A., Cortes, J. P. F., & Nanayakkara, S.C. (2024). CoTacs: A Haptic Toolkit to Explore Effective On-Body Haptic Feedback by Ideating, Designing, Evaluating and Refining Haptic Designs Using Group Collaboration. International Journal of Human–Computer Interaction, 1–21.

Using Sensor-Based Programming to Improve Self-Efficacy and Outcome Expectancy for Students from Underrepresented Groups

Suriyaarachchi, H., Nassani, A., Denny, P. and Nanayakkara, S.C. 2023. Using Sensor-Based Programming to Improve Self-Efficacy and Outcome Expectancy for Students from Underrepresented Groups. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1 (ITiCSE 2023). Association for Computing Machinery, New York, NY, USA, 187–193.

Towards Controllable Audio Texture Morphing

Gupta, C.*, Kamath, P.*, Wei, Y., Li, Z., Nanayakkara, S.C., Wyse, L. 2023. Towards Controllable Audio Texture Morphing. In 48th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '23), 4-10 June, 2023, Rhodes Island, Greece.

Tidd: Augmented Tabletop Interaction Supports Children with Autism to Train Daily Living Skills

Wu, Q., Wang, W., Liu, Q., Cao, J., Xu, D. and Nanayakkara, S.C., 2023. Tidd: Augmented Tabletop Interaction Supports Children with Autism to Train Daily Living Skills. In ACM SIGGRAPH 2023 Posters (pp. 1-2).

Striving for Authentic and Sustained Technology Use in the Classroom: Lessons Learned from a Longitudinal Evaluation of a Sensor-Based Science Education Platform

Chua, Y., Cooray, S., Cortes, J.P.F., Denny, P., Dupuch, S., Garbett, D.L., Nassani, A., Cao, J., Qiao, H., Reis, A., Reis, D., Scholl, P.M., Sridhar, P.K., Suriyaarachchi, H., Taimana, F., Tang, V., Weerasinghe, C., Wen, E., Wu, M., Wu, Q., Zhang, H., Nanayakkara, S.C. 2023. Striving for Authentic and Sustained Technology Use in the Classroom: Lessons Learned from a Longitudinal Evaluation of a Sensor-Based Science Education Platform. International Journal of Human–Computer Interaction (IJHCI).

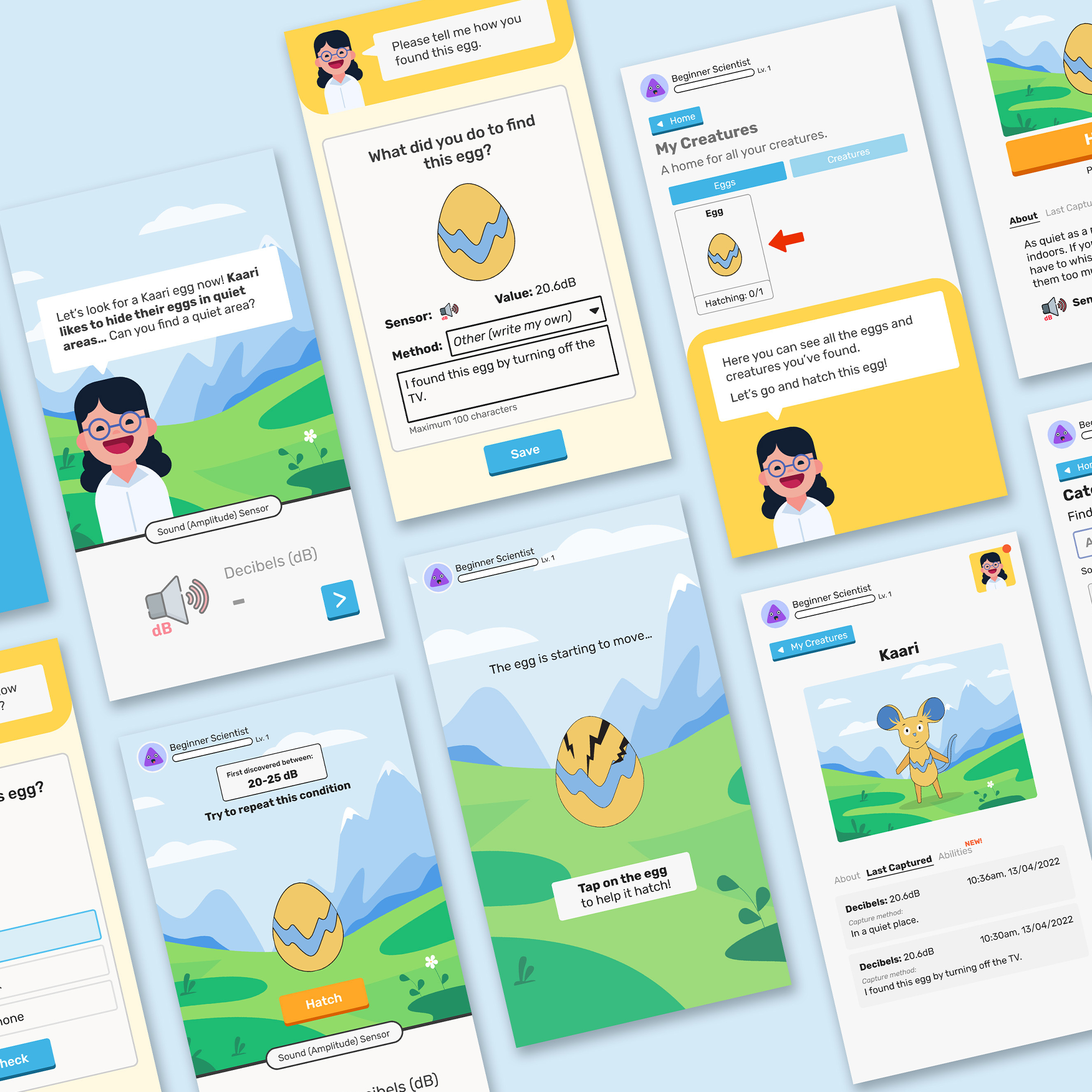

Snatch and hatch: Improving receptivity towards a nature of science with a playful mobile application

Qiao, H., Suriyaarachchi, H., Cooray, S. and Nanayakkara, S.C. 2023, June. Snatch and hatch: Improving receptivity towards a nature of science with a playful mobile application. In Proceedings of the 22nd Annual ACM Interaction Design and Children Conference (pp. 278-288).

Know Thyself: Improving Interoceptive Ability Through Ambient Biofeedback in the Workplace

Chua, P., Agres, K. and Nanayakkara, S.C., 2023. Know Thyself: Improving Interoceptive Ability Through Ambient Biofeedback in the Workplace.

Improving the Domain Adaptation of Retrieval Augmented Generation (RAG) Models for Open Domain Question Answering

Siriwardhana, S., Weerasekera, R., Wen, E., Kaluarachchi, T., Rana, R. and Nanayakkara, S.C., 2023. Improving the Domain Adaptation of Retrieval Augmented Generation (RAG) Models for Open Domain Question Answering. Transactions of the Association for Computational Linguistics.

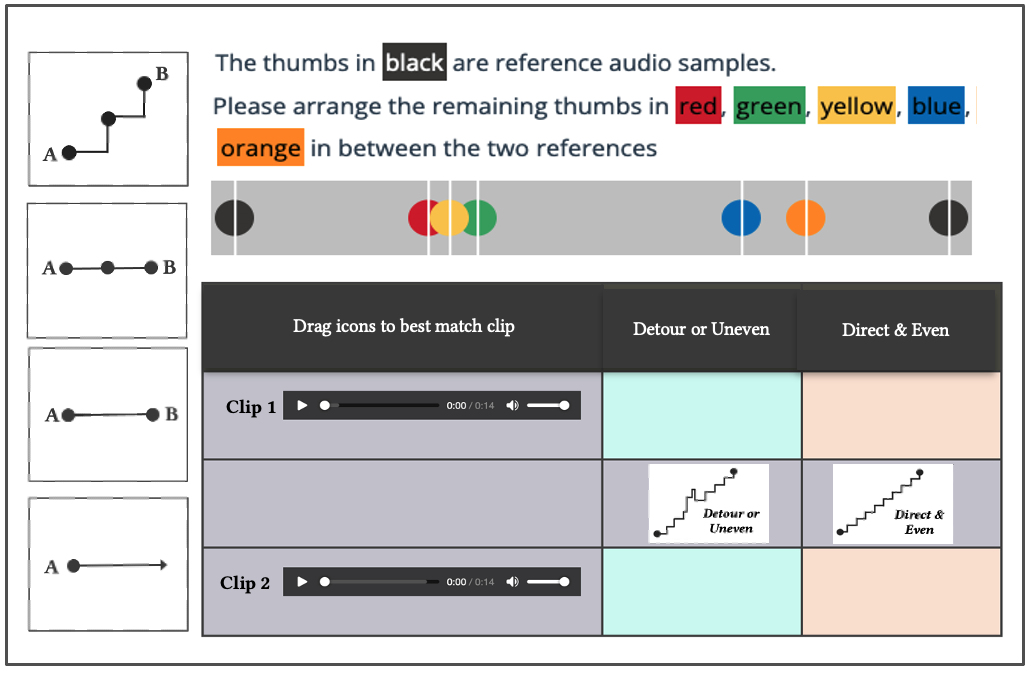

Evaluating Descriptive Quality of AI-Generated Audio Using Image-Schemas

Kamath, P., Li, Z., Gupta, C., Jaidka, K., Nanayakkara, S.C. and Wyse, L. 2023. Evaluating Descriptive Quality of AI Generated Audio Using Image-Schemas. In 28th International Conference on Intelligent User Interfaces (IUI ’23), March 27–31, 2023, Sydney, NSW, Australia.

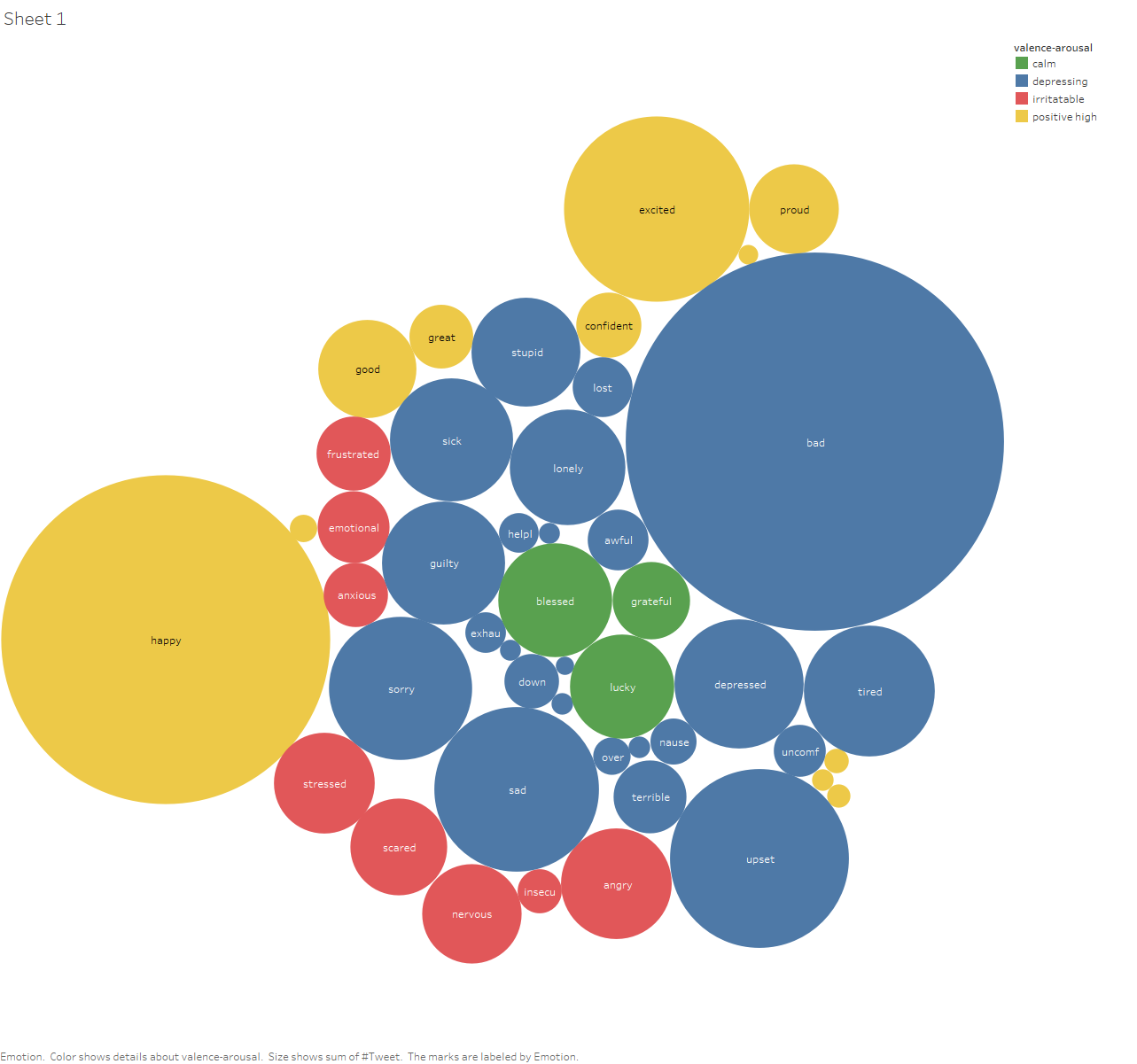

EMO-KNOW: A Large Scale Dataset on Emotion-Cause

Nguyen, M., Samaradivakara, Y., Sasikumar, P., Gupta, C. and Nanayakkara, S.C., 2023, December. EMO-KNOW: A Large Scale Dataset on Emotion-Cause. In Findings of the Association for Computational Linguistics: EMNLP 2023 (pp. 11043-11051).

Can AI Models Summarize Your Diary Entries? Investigating Utility of Abstractive Summarization for Autobiographical Text

Siriwardhana, S.*, Gupta, C.*, Kaluarachchi, T., Dissanayake, V., Ellawela, S., & Nanayakkara, S.C. (2023). Can AI Models Summarize Your Diary Entries? Investigating Utility of Abstractive Summarization for Autobiographical Text. International Journal of Human–Computer Interaction, 1–19.

A Corneal Surface Reflections-Based Intelligent System for Lifelogging Applications

Kaluarachchi, T., Siriwardhana, S., Wen, E., & Nanayakkara, S.C. 2023. A Corneal Surface Reflections-Based Intelligent System for Lifelogging Applications. In International Journal of Human–Computer Interaction.

XRtic: A Prototyping Toolkit for XR Applications using Cloth Deformation

Muthukumarana, S., Nassani, A., Park, N., Steimle, J., Billinghurst, M., and Nanayakkara, S.C., 2022. XRtic: A Prototyping Toolkit for XR Applications using Cloth Deformation. In 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), IEEE, 2022.

WasmAndroid: A Cross-Platform Runtime for Native Programming Languages on Android

Wen, E., Weber, G. and Nanayakkara, S.C., 2022. WasmAndroid: A Cross-Platform Runtime for Native Programming Languages on Android. ACM Transactions on Embedded Computing Systems (TECS).

VRhook: A Data Collection Tool for VR Motion Sickness Research

Wen, E., Kaluarachchi, T., Siriwardhana, S., Tang, V., Billinghurst, M., Lindeman, R.W., Yao, R., Lin, J. and Nanayakkara, S.C., 2022. VRhook: A Data Collection Tool for VR Motion Sickness Research. In The 35th Annual ACM Symposium on User Interface Software and Technology (UIST ’22), October 29-November 2, 2022, Bend, OR, USA.

Troi: Towards Understanding Users Perspectives to Mobile Automatic Emotion Recognition System in Their Natural Setting

Dissanayake V., Tang V., Elvitigala D.S., Wen E., Wu M., Nanayakkara S.C. 2022. Troi: Towards Understanding Users Perspectives to Mobile Automatic Emotion Recognition System in Their Natural Setting. In Proceedings of the 24th International Conference on Mobile Human-Computer Interaction.

Toro: A Web-based Tool to Search, Explore, Screen, Compare and Visualize Literature

Messerschmidt, M., Chan, S., Wen, E. and Nanayakkara, S.C., 2022. Toro: A Web-based Tool to Search, Explore, Screen, Compare and Visualize Literature.

TickleFoot: Design, Development and Evaluation of a Novel Foot-tickling Mechanism that Can Evoke Laughter

Elvitigala D.S., Boldu R., Nanayakkara S.C., and Matthies D.J.C. 2022. TickleFoot: Design, Development and Evaluation of a Novel Foot-Tickling Mechanism That Can Evoke Laughter. ACM Trans. Comput.-Hum. Interact. 29, 3, Article 20 (June 2022), 23 pages.

SigRep: Towards Robust Wearable Emotion Recognition with Contrastive Representation Learning

Dissanayake, V., Seneviratne, S., Rana, R., Wen, E., Kaluarachchi, T., Nanayakkara, S.C., (2022). SigRep: Towards Robust Wearable Emotion Recognition with Contrastive Representation Learning. IEEE Access.

Self-supervised Representation Fusion for Speech and Wearable Based Emotion Recognition

Dissanayake V., Seneviratne S., Suriyaarachchi H., Wen E., Nanayakkara S.C. 2022. Self-supervised Representation Fusion for Speech and Wearable Based Emotion Recognition. In Proceedings of Interspeech 2022.

Scratch and Sense: Using Real-Time Sensor Data to Motivate Students Learning Scratch

Suriyaarachchi, H., Denny, P. and Nanayakkara, S.C., 2022. Scratch and Sense: Using Real-Time Sensor Data to Motivate Students Learning Scratch. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1 (SIGCSE 2022), March 3–5, 2022, Providence, RI, USA.

Primary School Students Programming with Real-Time Environmental Sensor Data

Suriyaarachchi, H., Denny, P., Cortés, J.P.F., Weerasinghe, C. and Nanayakkara, S.C., 2022. Primary School Students Programming with Real-Time Environmental Sensor Data. In Australasian Computing Education Conference (ACE ’22), February 14–18, 2022, Virtual Event, Australia.

Players and performance: opportunities for social interaction with augmented tabletop games at centres for children with autism

Wu, Q., Xu, R., Liu, V., Lottridge, D., and Nanayakkara, S.C. 2022. Players and performance: opportunities for social interaction with augmented tabletop games at centres for children with autism. Proc. ACM Hum.-Comput. Interact. 6, ISS, Article 563 (December 2022), 24 pages. Honorable Mention Award!

Human–Computer Integration: Towards Integrating the Human Body with the Computational Machine

Mueller, F.F., Semertzidis, N., Andres, J., Weigel, M., Nanayakkara, S.C., Patibanda, R., Li, Z., Strohmeier, P., Knibbe, J., Greuter, S., Obrist, M., Maes, P., Wang, D., Wolf, K., Gerber, L., Marshall, J., Kunze, K., Grudin, J., Reiterer, H. and Byrne, R. 2022. Human–Computer Integration: Towards Integrating the Human Body with the Computational Machine. Foundations and Trends® in Human-Computer Interaction, 16(1), pp.1-64.

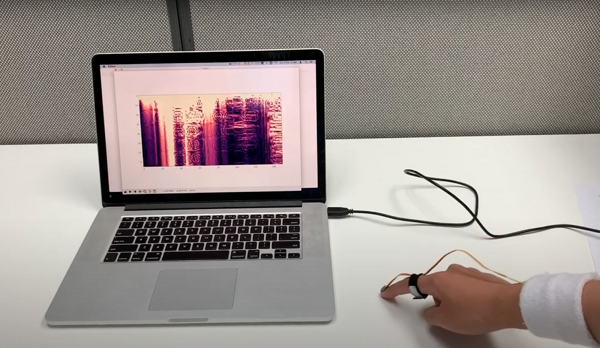

Design and Evaluation of a Mobile Sensing Platform for Water Conductivity

Weerasinghe, C., Padhye, L.P. and Nanayakkara, S.C., 2022, October. Design and Evaluation of a Mobile Sensing Platform for Water Conductivity. In 2022 IEEE Sensors (pp. 1-4). IEEE.

Computational Music Systems for Emotional Health and Wellbeing: A Review

Chua, P., Gupta, C., Agres, K.R. and Nanayakkara, S.C., 2022. Computational Music Systems for Emotional Health and Wellbeing: A Review.

ANISMA: A Prototyping Toolkit to Explore Haptic Skin Deformation Applications Using Shape-Memory Alloys

Messerschmidt M.A., Muthukumarana S., Hamdan N.A., Wagner A., Zhang H., Borchers J., and Nanayakkara S.C. 2022. ANISMA: A Prototyping Toolkit to Explore Haptic Skin Deformation Applications Using Shape-Memory Alloys. ACM Trans. Comput.-Hum. Interact. 29, 3, Article 19 (June 2022), 34 pages.

WasmAndroid: A Cross-Platform Runtime for Native Programming Languages on Android

Wen, E., Weber, G. and Nanayakkara, S.C., 2021. WasmAndroid: A Cross-Platform Runtime for Native Programming Languages on Android (WIP Paper). In Proceedings of the 22nd ACM SIGPLAN/SIGBED International Conference on Languages, Compilers, and Tools for Embedded Systems (LCTES ’21)

StressShoe: A DIY Toolkit for just-in-time Personalised Stress Interventions for Office Workers Performing Sedentary Tasks

Elvitigala, D.S., Scholl, P.M., Suriyaarachchi, H., Dissanayake, V. and Nanayakkara, S.C., 2021. StressShoe: A DIY Toolkit for just-in-time Personalised Stress Interventions for Office Workers Performing Sedentary Tasks. In MobileHCI ’21: The ACM International Conference on Mobile Human-Computer Interaction, September 27–30, 2021, Toulouse, France. Honorable Mention Award!

Sensor-Based Interactive Worksheets to Support Guided Scientific Inquiry

Cao, J., Chan, S.W.T., Garbett, D.L., Denny, P., Nassani, A., Scholl, P.M. and Nanayakkara, S.C., 2021. Sensor-Based Interactive Worksheets to Support Guided Scientific Inquiry. In Interaction Design and Children (IDC ’21), June 24–30, 2021, Athens, Greece. ACM, New York, NY, USA, 7 pages. Best Short Paper Award!

OM: A Comprehensive Tool to Elicit Subjective Vibrotactile Expressions Associated with Contextualised Meaning in Our Everyday Lives

Cortés, J.P.F., Suriyaarachchi, H., Nassani, A., Zhang, H. and Nanayakkara, S.C., 2021. OM: A Comprehensive Tool to Elicit Subjective Vibrotactile Expressions Associated with Contextualised Meaning in Our Everyday Lives. In MobileHCI ’21: The ACM International Conference on Mobile Human Computer Interaction, Sept. 27- Oct. 1, 2021, Toulouse, France.

mobiLLD: Exploring the Detection of Leg Length Discrepancy and Altering Gait with Mobile Smart Insoles

Matthies, D.J.C., Elvitigala, D.S., Fu, A., Yin, D. and Nanayakkara, S.C., 2021. mobiLLD: Exploring the Detection of Leg Length Discrepancy and Altering Gait with Mobile Smart Insoles. In The 14th PErvasive Technologies Related to Assistive Environments Conference (PETRA 2021), June 29-July 2, 2021, Corfu, Greece.

KinVoices: Using Voices of Friends and Family in Voice Interfaces

Chan, S. W. T., Gunasekaran, T., Pai, Y. S., Zhang, H. and Nanayakkara, S.C. 2021. KinVoices: Using Voices of Friends and Family in Voice Interfaces. Proc. ACM Hum.-Comput. Interact. 5, CSCW2, Article 446 (October 2021), 25 pages.

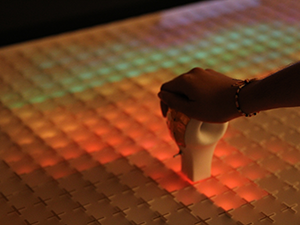

Jammify: Interactive Multi-Sensory System for Digital Art Jamming

Muthukumarana S., Elvitigala D.S., Wu Q., Pai Y.S., Nanayakkara S.C. 2021. Jammify: Interactive Multi-sensory System for Digital Art Jamming. In Human-Computer Interaction – INTERACT 2021, Springer International Publishing, Cham, 23–41.

GymSoles++: Combining Google Glass with Smart Insoles to Improve Body Posture when Performing Squats

Elvitigala, D.S., Matthies, D.J.C, Weerasinghe, C. and Nanayakkara, S.C., 2021. GymSoles++: Combining Google Glass with Smart Insoles to Improve Body Posture when Performing Squats. In The 14th PErvasive Technologies Related to Assistive Environments Conference (PETRA 2021), June 29-July 2, 2021, Corfu, Greece. Best Student Paper Award!

EyeKnowYou: A DIY Toolkit to Support Monitoring Cognitive Load and Actual Screen Time using a Head-Mounted Webcam

Kaluarachchi, T., Sapkota, S., Taradel, J., Thevenon, A., Matthies, D.J.C., and Nanayakkara, S.C., 2021. EyeKnowYou: A DIY Toolkit to Support Monitoring Cognitive Load and Actual Screen Time using a Head-Mounted Webcam. In MobileHCI ’21 Extended Abstracts: The ACM International Conference on Mobile Human Computer Interaction, Sept. 27- Oct. 1, 2021, Toulouse, France.

ClothTiles: A Prototyping Platform to Fabricate Customized Actuators on Clothing using 3D Printing and Shape-Memory Alloys

Muthukumarana, S., Messerschmidt, M.A., Matthies, D.J.C., Steimle, J., Scholl, P.M., and Nanayakkara, S.C., 2021. ClothTiles: A Prototyping Platform to Fabricate Customized Actuators on Clothing using 3D Printing and Shape-Memory Alloys. In CHI Conference on Human Factors in Computing Systems (CHI ’21), May 8–13, 2021, Yokohama, Japan.

CapGlasses: Untethered Capacitive Sensing with Smart Glasses

Matthies, D.J.C., Weerasinghe, C., Urban, B., and Nanayakkara, S.C., 2021. CapGlasses: Untethered Capacitive Sensing with SmartGlasses. In Augmented Humans International Conference 2021 (AHs ’21), February 22–24, 2021, Rovaniemi, Finland.

Augmented Foot: A Comprehensive Survey of Augmented Foot Interfaces

Elvitigala, D.S., Nanayakkara, S.C., and Huber, J., 2021. Augmented Foot: A Comprehensive Survey of Augmented Foot Interfaces. In Augmented Humans International Conference 2021 (AHs ’21), February 22–24, 2021, Rovaniemi, Finland.

A Review of Recent Deep Learning Approaches in Human-Centered Machine Learning

Kaluarachchi, T., Reis, A., and Nanayakkara, S.C., 2021. A Review of Recent Deep Learning Approaches in Human-Centered Machine Learning. Sensors. 21(7):2514.

WaveSense: Low Power Voxel-tracking Technique for Resource Limited Devices

Withana, A., Kaluarachchi, T., Singhabahu, C., Ransiri, S., Shi, Y. and Nanayakkara, S.C., 2020. waveSense: Low Power Voxel-tracking Technique for Resource Limited Devices. In AHs ’20: Augmented Humans International Conference (AHs ’20), March 16–17, 2020, Kaiserslautern, Germany.

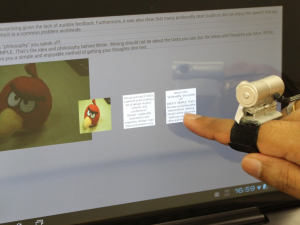

VersaTouch: A Versatile Plug-and-Play System that Enables Touch Interactions on Everyday Passive Surfaces

Shi, Y., Zhang, H., Cao, J., and Nanayakkara, S.C., 2020. VersaTouch: A Versatile Plug-and-Play System that Enables Touch Interactions on Everyday Passive Surfaces. In AHs ’20: Augmented Humans International Conference (AHs ’20), March 16–17, 2020, Kaiserslautern, Germany.

Touch me Gently: Recreating the Perception of Touch using a Shape-Memory Alloy Matrix

Muthukumarana, S., Elvitigala, D.S., Cortes, J.P.F., Matthies, D.J. and Nanayakkara, S.C., 2020, April. Touch me gently: recreating the perception of touch using a shape-memory alloy matrix. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20), April 25–30, 2020, Honolulu, Hawai'i, USA. ACM.

StressFoot: Uncovering the Potential of the Foot for Acute Stress Sensing in Sitting Posture

Elvitigala, D.S., Matthies, D.J.C., and Nanayakkara, S.C., 2020. StressFoot: Uncovering the Potential of the Foot for Acute Stress Sensing in Sitting Posture. Sensors, 20(10), p.2882.

Speech Emotion Recognition ‘in the Wild’ Using an Autoencoder

Dissanayake, V., Zhang, H., Billinghurst, M. and Nanayakkara, S.C., 2020. Speech Emotion Recognition ‘in the Wild’ using an Autoencoder. Proc. Interspeech 2020, pp.526-530.

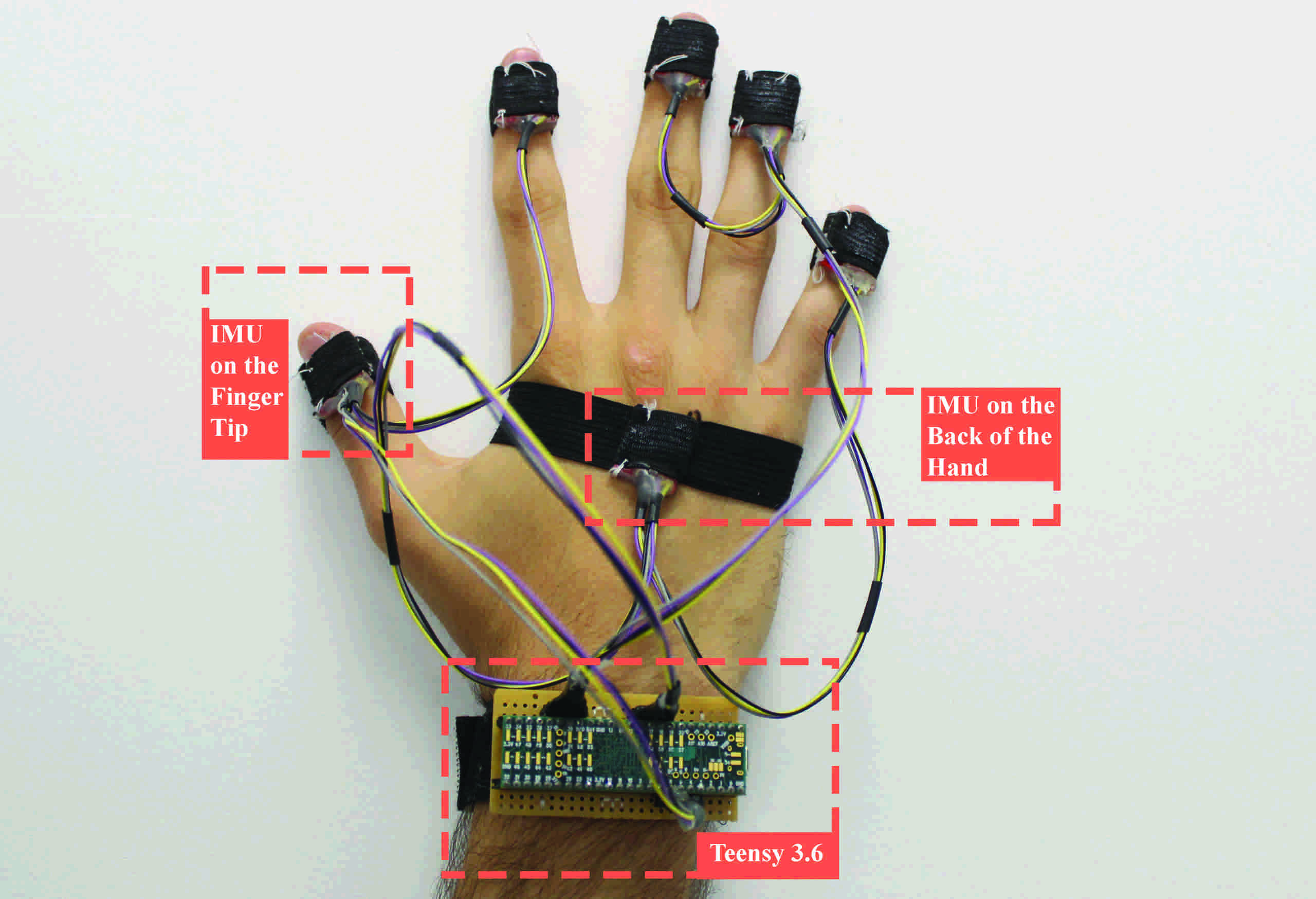

Ready, Steady, Touch!: Sensing Physical Contact with a Finger-Mounted IMU

Shi, Y., Zhang, H., Zhao, K., Cao, J., Sun, M. and Nanayakkara, S.C., 2020. Ready, steady, touch! sensing physical contact with a finger-mounted IMU. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 4(2), pp.1-25.

Prompto: Investigating Receptivity to Prompts Based on Cognitive Load from Memory Training Conversational Agent

Chan, S. W. T., Sapkota, S., Mathews, R., Zhang, H. and Nanayakkara, S. C., 2020. Prompto: Investigating Receptivity to Prompts Based on Cognitive Load from Memory Training Conversational Agent. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 4(4), pp.1-23.

Progression of Cognitive-Affective States During Learning in Kindergarteners: Bringing Together Physiological, Observational and Performance Data

Sridhar, P.K. and Nanayakkara, S.C., 2020. Progression of Cognitive-Affective States During Learning in Kindergarteners: Bringing Together Physiological, Observational and Performance Data. Education Sciences, 10(7), p.177.

Next Steps for Human-Computer Integration

Mueller, F.F., Lopes, P., Strohmeier, P., Ju, W., Seim, C., Weigel, M., Nanayakkara, S.C., Obrist, M., Li, Z., Delfa, J. and Nishida, J., 2020, April. Next Steps for Human-Computer Integration. In Conference on Human Factors in Computing Systems Proceedings (CHI 2020), April 25-30, 2020, Honolulu, Hawai'i, USA. ACM

Multimodal Emotion Recognition With Transformer-Based Self Supervised Feature Fusion

Siriwardhana, S., Kaluarachchi, T., Billinghurst, M. and Nanayakkara, S.C., 2020. Multimodal Emotion Recognition With Transformer-Based Self Supervised Feature Fusion. IEEE Access, 8, pp.176274-176285.

MAGHair: A Wearable System to Create Unique Tactile Feedback by Stimulating Only the Body Hair

Boldu, R., Wijewardena, M., Zhang, H. and Nanayakkara, S.C., 2020, October. MAGHair: A Wearable System to Create Unique Tactile Feedback by Stimulating Only the Body Hair. In 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services (pp. 1-10).

LightTank

Rieger, U., Liu, Y., Boldu, R., Zhang, H., Alwani, H. and Nanayakkara, S.C., 2020. LightTank. In SIGGRAPH Asia 2020 Art Gallery (pp. 1-1).

Jointly Fine-Tuning "BERT-like" Self Supervised Models to Improve Multimodal Speech Emotion Recognition

Siriwardhana, S., Reis, A., Weerasekera, R., and Nanayakkara, S.C., 2020. Jointly Fine-Tuning “BERT-Like” Self Supervised Models to Improve Multimodal Speech Emotion Recognition. Proc. Interspeech 2020, 3755-3759.

GymSoles++: Using Smart Wearables to Improve Body Posture when Performing Squats and Dead-Lifts

Elvitigala D. S., Matthies D. J. C., Weerasinghe C., Shi Y., and Nanayakkara S.C., 2020. GymSoles++: Using Smart Wearables to Improve Body Posture when Performing Squats and Dead-Lifts. In AHs ’20: Augmented Humans International Conference (AHs ’20), March 16–17, 2020, Kaiserslautern, Germany.

AiSee: An Assistive Wearable Device to Support Visually Impaired Grocery Shoppers

Boldu, R., Matthies, D.J., Zhang, H. and Nanayakkara, S.C., 2020. AiSee: An Assistive Wearable Device to Support Visually Impaired Grocery Shoppers. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 4(4), pp.1-25.

Prospero: A Personal Wearable Memory Coach

Chan, S. W. T., Zhang, H. and Nanayakkara, S. C., 2019, March. Prospero: A Personal Wearable Memory Coach. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-5).

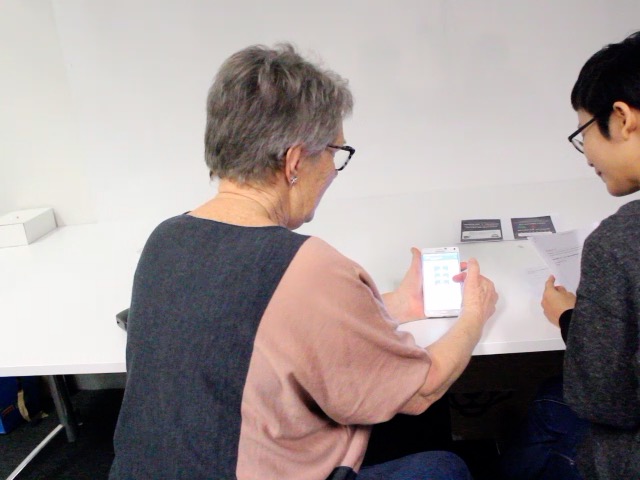

ProspecFit: In Situ Evaluation of Digital Prospective Memory Training for Older Adults

Chan, S. W. T., Buddhika, T., Zhang, H. and Nanayakkara, S. C., 2019. ProspecFit: In Situ Evaluation of Digital Prospective Memory Training for Older Adults. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 3(3), pp.1-20.

PhantomTouch: Creating an Extended Reality by the Illusion of Touch using a Shape-Memory Alloy Matrix

Muthukumarana, S., Elvitigala, D.S., Cortes, J.P.F., Matthies, D.J. and Nanayakkara, S.C., 2019, November. PhantomTouch: Creating an Extended Reality by the Illusion of Touch using a Shape-Memory Alloy Matrix. In SIGGRAPH Asia 2019 XR (pp. 29-30). ACM.

oSense: Object-Activity Identification Based on Gasping Posture and Motion

Buddhika, T., Zhang, H., Weerasinghe, C., Nanayakkara, S.C. and Zimmermann, R., 2019, March. OSense: Object-activity identification based on gasping posture and motion. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-5).

M-Hair: Extended Reality by Stimulating the Body Hair

Boldu, R., Jain, S., Cortes, J.P.F., Zhang, H. and Nanayakkara, S.C., 2019. M-Hair: Extended Reality by Stimulating the Body Hair. In SIGGRAPH Asia 2019 XR (pp. 27-28).

M-Hair: Creating Novel Tactile Feedback by Augmenting the Body Hair to Respond to Magnetic Field

Boldu, R., Jain, S., Forero Cortes, J.P., Zhang, H. and Nanayakkara, S.C., 2019, October. M-Hair: Creating Novel Tactile Feedback by Augmenting the Body Hair to Respond to Magnetic Field. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (pp. 323-328).

GymSoles: Improving Squats and Dead-Lifts by Visualizing the User’s Centre of Pressure

Elvitigala, D.S., Matthies, D.J., David, L., Weerasinghe, C. and Nanayakkara, S.C., 2019, May. GymSoles: Improving Squats and Dead-Lifts by Visualizing the User's Center of Pressure. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-12).

Going beyond performance scores: Understanding cognitive-affective states in Kindergarteners and application of framework in classrooms

Sridhar, P. K., Chan, S. W. T., Chua, Y., Quin, Y. W. and Nanayakkara, S. C., 2019. Going beyond performance scores: Understanding cognitive-affective states in Kindergarteners and application of framework in classrooms. International Journal of Child-Computer Interaction, 21, pp.37-53.

fSense: Unlocking the Dimension of Force for Gestural Interactions using Smartwatch PPG Sensor

Buddhika, T., Zhang, H., Chan, S. W. T., Dissanayake, V., Nanayakkara, S.C. and Zimmermann, R., 2019, March. fSense: unlocking the dimension of force for gestural interactions using smartwatch PPG sensor. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-5).

Evaluating IVR in Primary School Classrooms

Chua, Y., Sridhar, P.K., Zhang, H., Dissanayake, V. and Nanayakkara, S.C., 2019, October. Evaluating IVR in Primary School Classrooms. In 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 169-174). IEEE.

CricketCoach: Towards Creating a Better Awareness of Gripping Forces for Cricketers

Muthukumarana, S., Matthies, D.J., Weerasinghe, C., Elvitigala, D.S. and Nanayakkara, S.C., 2019, March. CricketCoach: Towards Creating a Better Awareness of Gripping Forces for Cricketers. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-2).

CompRate: Power Efficient Heart Rate and Heart Rate Variability Monitoring on Smart Wearables

Dissanayake, V., Elvitigala, D.S., Zhang, H., Weerasinghe, C. and Nanayakkara, S.C., 2019, November. CompRate: Power Efficient Heart Rate and Heart Rate Variability Monitoring on Smart Wearables. In 25th ACM Symposium on Virtual Reality Software and Technology (pp. 1-8).

ChewIt. An Intraoral Interface for Discreet Interactions.

Cascón, P.C., Matthies, D.J., Muthukumarana, S. and Nanayakkara, S.C., 2019, May. ChewIt. An Intraoral Interface for Discreet Interactions. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-13).

CapMat: A Smart Foot Mat for User Authentication

Matthies, D.J., Elvitigala, D.S., Muthukumarana, S., Huber, J. and Nanayakkara, S.C., 2019, March. CapMat: a smart foot mat for user authentication. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-2).

2bit-TactileHand: Evaluating Tactons for On-Body Vibrotactile Displays on the Hand and Wrist

Elvitigala, D.S., Matthies, D.J., Dissanayake, V., Weerasinghe, C. and Nanayakkara, S.C., 2019, March. 2bit-TactileHand: Evaluating Tactons for On-Body Vibrotactile Displays on the Hand and Wrist. In Proceedings of the 10th Augmented Human International Conference 2019 (pp. 1-8).

Visual Field Visualizer: Easier & Scalable way to be Aware of the Visual Field

Nguyen, N.T., Nanayakkara, S.C. and Lee, H., 2018, February. Visual field visualizer: easier & scalable way to be aware of the visual field. In Proceedings of the 9th Augmented Human International Conference (pp. 1-3).

Triangulation of Physiological, Behavioural and Observational Data offers better insights into cognitive-emotional states in learning

Sridhar, P.K., Quin, Y.W., and Nanayakkara, S.C., 2018. Triangulation of Physiological, Behavioural and Observational Data offers better insights into cognitive-emotional states in learning. Presented in the Annual Convention of Association for Psychological Science. San Francisco.

Thumb-In-Motion: Evaluating Thumb to Ring Microgestures for Athletic Activity

Boldu, R., Dancu, A., Matthies, D.J., Cascón, P.G., Ransiri, S. and Nanayakkara, S.C., 2018, October. Thumb-In-Motion: Evaluating Thumb-to-Ring Microgestures for Athletic Activity. In Proceedings of the Symposium on Spatial User Interaction (pp. 150-157).

Target Driven Visual Navigation with Hybrid Asynchronous Universal Successor Representations

Siriwardhana, S., Weerasekera, R. and Nanayakkara, S.C., 2018. Target Driven Visual Navigation with Hybrid Asynchronous Universal Successor Representations. In Deep RL Workshop, NeurIPS 2018.

Supporting Rhythm Activities of Deaf Children using Music-Sensory-Substitution Systems

Petry, B., Illandara, T., Elvitigala, D.S. and Nanayakkara, S.C., 2018, April. Supporting rhythm activities of deaf children using music-sensory-substitution systems. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (pp. 1-10).

Step Detection for Rollator Users with Smartwatches

Matthies, D.J., Haescher, M., Nanayakkara, S.C. and Bieber, G., 2018, October. Step detection for rollator users with smartwatches. In Proceedings of the Symposium on Spatial User Interaction (pp. 163-167).

Hand Range Interface: Information Always at Hand with a Body-Centric Mid-Air Input Surface

Xu, X., Dancu, A., Maes, P. and Nanayakkara, S.C., 2018, September. Hand range interface: Information always at hand with a body-centric mid-air input surface. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services (pp. 1-12).

Going beyond performance scores: understanding cognitive-affective states in kindergarteners

Sridhar, P. K., Chan, S. W. T. and Nanayakkara, S.C., 2018. Going beyond performance scores: understanding cognitive-affective states in kindergarteners. In Proceedings of the 17th ACM Conference on Interaction Design and Children (pp. 253-265). ACM.

GestAKey: Touch Interaction on Individual Keycaps

Shi, Y., Zhang, H., Rajapakse, H., Perera, N.T., Vega Gálvez, T. and Nanayakkara, S.C., 2018, April. Gestakey: Touch interaction on individual keycaps. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (pp. 1-12).

FingerReader2.0: Designing and Evaluating a Wearable Finger-Worn Camera to Assist People with Visual Impairments while Shopping

Boldu, R., Dancu, A., Matthies, D.J.C., Buddhika, T., Siriwardhana, S. and Nanayakkara, S.C., 2018. FingerReader2.0: Designing and Evaluating a Wearable Finger-Worn Camera to Assist People with Visual Impairments while Shopping. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2(3), 94. ACM.

Doodle Daydream: An Interactive Display to Support Playful and Creative Interactions Between Co-workers

Elvitigala, S., Chan, S. W. T., Howell, N., Matthies, D. J. and Nanayakkara, S. C., 2018, October. Doodle Daydream: An Interactive Display to Support Playful and Creative Interactions Between Co-workers. In Proceedings of the Symposium on Spatial User Interaction (pp. 186-186).

Development of a Triangulated Framework to Understand Cognitive-Emotional States during Learning in Children

Sridhar, P.K. and Nanayakkara, S.C., 2018. Development of a Triangulated Framework to Understand Cognitive-Emotional States during Learning in Children. In Proceedings of the International Conference of Learning Sciences and Early Childhood Education.

Assistive Augmentation

Huber, J., Shilkrot, R., Maes, P. and Nanayakkara, S.C. eds., 2018. Assistive augmentation. Springer.

UTAP-Unique Topographies for Acoustic Propagation: Designing Algorithmic Waveguides for Sensing in Interactive Malleable Interfaces

Rod, J., Collins, D., Wessolek, D., Illandara, T., Ai, Y., Lee, H. and Nanayakkara, S.C., 2017, March. UTAP-Unique Topographies for Acoustic Propagation: Designing Algorithmic Waveguides for Sensing in Interactive Malleable Interfaces. In Proceedings of the Eleventh International Conference on Tangible, Embedded, and Embodied Interaction (pp. 141-152).

Towards Understanding of Play with Augmented Toys

Sridhar, P.K., Nanayakkara, S.C. and Huber, J., 2017, March. Towards understanding of play with augmented toys. In Proceedings of the 8th Augmented Human International Conference (pp. 1-4).

SonicSG: From Floating to Sounding Pixels

Nanayakkara, S.C., Schroepfer, T., Wyse, L., Lian, A. and Withana, A., 2017, March. SonicSG: from floating to sounding pixels. In Proceedings of the 8th Augmented Human International Conference (pp. 1-5).

Scaffolding the Music Listening and Music Making Experience for the Deaf

Petry, B., Huber, J. and Nanayakkara, S.C., 2018. Scaffolding the Music Listening and Music Making Experience for the Deaf. In Assistive Augmentation (pp. 23-48). Springer, Singapore.

InSight: A Systematic Approach to Create Dynamic Human-Controller-Interactions

Boldu, R., Zhang, H., Cortés, J.P.F., Muthukumarana, S. and Nanayakkara, S.C., 2017, March. Insight: a systematic approach to create dynamic human-controller-interactions. In Proceedings of the 8th Augmented Human International Conference (pp. 1-5). (Best Short Paper Award)

GrabAmps: Grab a Wire to Sense the Current Flow

Elvitigala, D.S., Peiris, R., Wilhelm, E., Foong, S. and Nanayakkara, S.C., 2017, March. GrabAmps: grab a wire to sense the current flow. In Proceedings of the 8th Augmented Human International Conference (pp. 1-4).

GestAKey: Get More Done with Just-a-Key on a Keyboard

Shi, Y., Vega Gálvez, T., Zhang, H. and Nanayakkara, S.C., 2017, October. Gestakey: Get more done with just-a-key on a keyboard. In Adjunct Publication of the 30th Annual ACM Symposium on User Interface Software and Technology (pp. 73-75).

GesCAD: an Intuitive Interface for Conceptual Architectural Design

Khan, S., Rajapakse, H., Zhang, H., Nanayakkara, S.C., Tuncer, B. and Blessing, L., 2017, November. GesCAD: an intuitive interface for conceptual architectural design. In Proceedings of the 29th Australian conference on computer-human interaction (pp. 402-406).

Working with physiotherapists: Lessons learned from project SHRUG

Sridhar, P. and Nanayakkara, S.C., 2016. Working with physiotherapists: Lessons learned from project SHRUG. Workshop paper at the Annual Meeting of the Australian Special Interest Group for Computer Human Interaction (OzCHI'16), NY, USA.

waveSense: Ultra Low Power Gesture Sensing Based on Selective Volumetric Illumination

Withana, A., Ransiri, S., Kaluarachchi, T., Singhabahu, C., Shi, Y., Elvitigala, S. and Nanayakkara, S.C., 2016. waveSense: Ultra Low Power Gesture Sensing Based on Selective Volumetric Illumination. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST '16).

Towards One-Pixel-Displays for Sound Information Visualization

Sridhar, P.K., Petry, B., Pakianathan, P.V., Kartolo, A.S. and Nanayakkara, S.C., 2016, November. Towards one-pixel-displays for sound information visualization. In Proceedings of the 28th Australian Conference on Computer-Human Interaction (pp. 91-95).

Towards Development and Evaluation of Tangible Interfaces that Support Learning in Children

Sridhar, P.K. and Nanayakkara, S.C., 2016. Towards Development and Evaluation of Tangible Interfaces that Support Learning in Children. In Proceedings of Annual Meeting of the Australian Special Interest Group for Computer Human Interaction (OzCHI '16). ACM, New York, NY, USA.

SwimSight: Supporting Deaf Users to Participate in Swimming Games

Elvitigala, D.S., Wessolek, D., Achenbach, A.V., Singhabahu, C. and Nanayakkara, S.C., 2016, November. SwimSight: Supporting deaf users to participate in swimming games. In Proceedings of the 28th Australian Conference on Computer-Human Interaction (pp. 567-570).

PostBits: using contextual locations for embedding cloud information in the home

Pablo, J., Fernando, P., Sridhar, P., Withana, A., Nanayakkara, S.C., Steimle, J. and Maes, P., 2016. PostBits: using contextual locations for embedding cloud information in the home. Personal and Ubiquitous Computing, 20(6), pp.1001-1014.

MuSS-Bits: Sensor-Display Blocks for Deaf People to Explore Musical Sounds

Petry, B., Illandara, T. and Nanayakkara, S.C., 2016, November. MuSS-bits: sensor-display blocks for deaf people to explore musical sounds. In Proceedings of the 28th Australian Conference on Computer-Human Interaction (pp. 72-80).

MuSS-Bits Provisional Patent

Petry, B., Forero, J.P. and Nanayakkara, S.C., 2016. MuSS-Bits – Music-Sensory-Substitution Bits. Singapore Provisional Patent Application: 10201610020P. Filing Date: 29 November 2016.

Electrosmog Visualization through Augmented Blurry Vision

Fan, K., Seigneur, J.M., Nanayakkara, S.C. and Inami, M., 2016, February. Electrosmog visualization through augmented blurry vision. In Proceedings of the 7th Augmented Human International Conference 2016 (pp. 1-2).

Augmented Winter Ski with AR HMD

Fan, K., Seigneur, J.M., Guislain, J., Nanayakkara, S.C. and Inami, M., 2016, February. Augmented winter ski with AR HMD. In Proceedings of the 7th Augmented Human International Conference 2016 (pp. 1-2).

ArmSleeve: A Patient Monitoring System to Support Occupational Therapists in Stroke Rehabilitation

Ploderer, B., Fong, J., Withana, A., Klaic, M., Nair, S., Crocher, V., Vetere, F. and Nanayakkara, S.C., 2016, June. ArmSleeve: a patient monitoring system to support occupational therapists in stroke rehabilitation. In Proceedings of the 2016 ACM Conference on Designing Interactive Systems (pp. 700-711).

Ad-Hoc Access to Musical Sound for Deaf Individuals

Petry, B., Illandara, T., Forero, J.P. and Nanayakkara, S.C., 2016, October. Ad-Hoc Access to Musical Sound for Deaf Individuals. In Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility (pp. 285-286).

zSense: Enabling Shallow Depth Gesture Recognition for Greater Input Expressivity on Smart Wearables

Withana, A., Peiris, R., Samarasekara, N. and Nanayakkara, S.C., 2015, April. zsense: Enabling shallow depth gesture recognition for greater input expressivity on smart wearables. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 3661-3670).

SHRUG: stroke haptic rehabilitation using gaming

Peiris, R.L., Wijesinghe, V. and Nanayakkara, S.C., 2015. SHRUG: stroke haptic rehabilitation using gaming. In Proceedings of the 6th Augmented Human International Conference (AH '15). ACM, New York, NY, USA, 213-214.

RippleTouch: Initial Exploration of a Wave Resonant Based Full Body Haptic Interface

Withana, A., Koyama, S., Saakes, D., Minamizawa, K., Inami, M. and Nanayakkara, S.C., 2015, March. RippleTouch: initial exploration of a wave resonant based full body haptic interface. In Proceedings of the 6th Augmented Human International Conference (pp. 61-68).

FootNote: designing a cost effective plantar pressure monitoring system for diabetic foot ulcer prevention

Yong, K.F., Forero, J.P., Foong, S. and Nanayakkara, S.C., 2015, March. FootNote: designing a cost effective plantar pressure monitoring system for diabetic foot ulcer prevention. In Proceedings of the 6th Augmented Human International Conference (pp. 167-168).

FingerReader: A Wearable Device to Explore Printed Text on the Go

Shilkrot, R., Huber, J., Meng Ee, W., Maes, P. and Nanayakkara, S.C., 2015, April. FingerReader: a wearable device to explore printed text on the go. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 2363-2372).

Feel & See the Globe: A Thermal, Interactive Installation

Huber, J., Malavipathirana, H., Wang, Y., Li, X., Fu, J.C., Maes, P. and Nanayakkara, S.C., 2015, March. Feel & see the globe: a thermal, interactive installation. In Proceedings of the 6th Augmented Human International Conference (pp. 215-216).

BWard: An Optical Approach for Reliable in-situ Early Blood Leakage Detection at Catheter Extraction Points

Cortés, J.P., Ching, T.H., Wu, C., Chionh, C.Y., Nanayakkara, S.C. and Foong, S., 2015, July. Bward: An optical approach for reliable in-situ early blood leakage detection at catheter extraction points. In 2015 IEEE 7th International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM) (pp. 232-237). IEEE.

Workshop on Assistive Augmentation

Huber, J., Rekimoto, J., Inami, M., Shilkrot, R., Maes, P., Meng Ee, W., Pullin, G. and Nanayakkara, S.C., 2014. Workshop on assistive augmentation. In CHI'14 Extended Abstracts on Human Factors in Computing Systems (pp. 103-106).

SpiderVision: Extending the Human Field of View for Augmented Awareness

Fan, K., Huber, J., Nanayakkara, S.C. and Inami, M., 2014, March. SpiderVision: extending the human field of view for augmented awareness. In Proceedings of the 5th augmented human international conference (pp. 1-8).

SparKubes: Exploring the Interplay between Digital and Physical Spaces with Minimalistic Interfaces

Ortega-Avila, S., Huber, J., Janaka, N., Withana, A., Fernando, P. and Nanayakkara, S.C., 2014, December. SparKubes: exploring the interplay between digital and physical spaces with minimalistic interfaces. In Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (pp. 204-207).

SHRUG: stroke haptic rehabilitation using gaming

Peiris, R.L., Janaka, N., De Silva, D. and Nanayakkara, S.C., 2014, December. SHRUG: stroke haptic rehabilitation using gaming. In Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (pp. 380-383).

PaperPixels: a Toolkit to Create Paper Based Displays

Peiris, R.L. and Nanayakkara, S.C., 2014, December. PaperPixels: a toolkit to create paper-based displays. In Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (pp. 498-504).

nZwarm: a swarm of luminous sea creatures that interact with passers-by

Nanayakkara, S.C., Schroepfer, T., Withana, A., Wortmann, T. and Pablo, J., 2014. nZwarm: a swarm of luminous sea creatures that interact with passers-by. Wellington LUX.

Keepers & Bees: an interactive light-art installation of interactive critters that visitors can interact with in real time via their smartphones

Schroepfer, T., Nanayakkara, S.C., Withana, A., Wortmann, T., Cornelius, A., Khew, Y.N. and Lian, A., 2014. Keepers & Bees: an interactive light-art installation of interactive critters that visitors can interact with in real time via their smartphones. In Singapore, Archifest 2014 (Keepers: Singapore Designer Collaborative, Orchard Green, Singapore, 26 September – 11 October 2014).

iSwarm: a swarm of luminous sea creatures that interact with passers-by

Schroepfer, T., Nanayakkara, S.C., A., Wortmann, T., Cornelius, A., Khew, Y.N., Lian, A., Yeo, K.P., Peiris, R.L., Withana, A., Werry, I., Petry, B., Ortega, S., Janaka, N., Elvitigala, S., Fernando, P. and Samarasekara, N., 2014. iSwarm: a swarm of luminous sea creatures that interact with passers-by. In Singapore iLight 2014 (Marina Bay, Singapore, 7–30 March 2014).

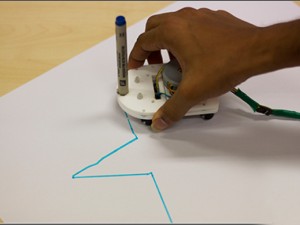

I-Draw: Towards a Freehand Drawing Assistant

Fernando, P., Peiris, R.L. and Nanayakkara, S.C., 2014, December. I-draw: Towards a freehand drawing assistant. In Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (pp. 208-211).

FingerReader: A Wearable Device to Support Text-Reading on the Go

Shilkrot, R., Huber, J., Liu, C., Maes, P. and Nanayakkara, S.C., 2014. FingerReader: a wearable device to support text reading on the go. In CHI'14 Extended Abstracts on Human Factors in Computing Systems (pp. 2359-2364).

EarPut: Augmenting Ear-worn Devices for Ear-based Interaction

Lissermann, R., Huber, J., Hadjakos, A., Nanayakkara, S.C. and Mühlhäuser, M., 2014, December. EarPut: augmenting ear-worn devices for ear-based interaction. In Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design (pp. 300-307).

Birdie: Towards a true flying experience

Petry, B., Ortega-Avila, S., Nanayakkara, S.C. and Foong, S., 2014. Birdie: Towards a true flying experience. In Workshop on Assistive Augmentation, Extended Abstracts of the 32nd Annual SIGCHI Conference on Human Factors in Computing Systems (CHI '14). Toronto, Canada.

A Wearable Text-Reading Device for the Visually-Impaired

Shilkrot, R., Huber, J., Liu, C., Maes, P. and Nanayakkara, S.C., 2014. A wearable text-reading device for the visually-impaired. In CHI'14 Extended Abstracts on Human Factors in Computing Systems (pp. 193-194).

StickEar: Making Everyday Objects Respond to Sound

Yeo, K.P., Nanayakkara, S.C. and Ransiri, S., 2013, October. StickEar: making everyday objects respond to sound. In Proceedings of the 26th annual ACM symposium on User interface software and technology (pp. 221-226).

StickEar: Augmenting Objects and Places Wherever Whenever

Yeo, K.P. and Nanayakkara, S.C., 2013. StickEar: augmenting objects and places wherever whenever. In CHI'13 Extended Abstracts on Human Factors in Computing Systems (pp. 751-756).

SpeechPlay: Composing and Sharing Expressive Speech Through Visually Augmented Text

Yeo, K.P. and Nanayakkara, S.C., 2013, November. SpeechPlay: composing and sharing expressive speech through visually augmented text. In Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration (pp. 565-568).

SmartFinger: Connecting Devices, Objects and People seamlessly

Ransiri, S., Peiris, R.L., Yeo, K.P. and Nanayakkara, S.C., 2013, November. SmartFinger: connecting devices, objects and people seamlessly. In Proceedings of the 25th Australian Computer-Human Interaction Conference: Augmentation, Application, Innovation, Collaboration (pp. 359-362).

SmartFinger: An Augmented Finger as a Seamless ‘Channel’ between Digital and Physical Objects

Ransiri, S. and Nanayakkara, S.C., 2013, March. SmartFinger: an augmented finger as a seamless 'channel' between digital and physical objects. In Proceedings of the 4th Augmented Human International Conference (pp. 5-8).

FingerDraw: More than a Digital Paintbrush

Hettiarachchi, A., Nanayakkara, S.C., Yeo, K.P., Shilkrot, R. and Maes, P., 2013, March. FingerDraw: More than a Digital Paintbrush. In ACM SIGCHI Augmented Human.

EyeRing: A Finger Worn Input Device for Seamless Interactions with our Surroundings

Nanayakkara, S.C., Shilkrot, R., Yeo, K.P. and Maes, P., 2013, March. EyeRing: A Finger Worn Input Device for Seamless Interactions with our Surroundings. In ACM SIGCHI Augmented Human.

Enhancing Musical Experience for the Hearing-impaired using Visual and Haptic Displays

Nanayakkara, S.C., Wyse, L., Ong, S.H. and Taylor, E., 2013. Enhancing Musical Experience for the Hearing-impaired using Visual and Haptic Displays. Human-Computer Interaction, 28(2), pp.115-160.

AugmentedForearm: Exploring the Design Space of a Display-enhanced Forearm

Olberding, S., Yeo, K.P., Nanayakkara, S.C. and Steimle, J., 2013, March. AugmentedForearm: Exploring the Design Space of a Display-enhanced Forearm. In ACM SIGCHI Augmented Human.

WatchMe: Wrist-worn interface that makes remote monitoring seamless

Ransiri, S. and Nanayakkara, S.C., 2012. WatchMe: Wrist-worn interface that makes remote monitoring seamless. In ASSETS '12.

The Haptic Chair as a Speech Training Aid for the Deaf

Nanayakkara, S.C., Wyse, L. and Taylor, E.A., 2012, November. The haptic chair as a speech training aid for the deaf. In Proceedings of the 24th Australian Computer-Human Interaction Conference (pp. 405-410).

Palm-area sensitivity to vibrotactile stimuli above 1 kHz

Wyse, L., Nanayakkara, S.C., Seekings, P., Ong, S.H. and Taylor, E., 2012. Palm-area sensitivity to vibrotactile stimuli above 1 kHz. In NIME '12.

EyeRing: An Eye on a Finger

Nanayakkara, S.C., Shilkrot, R. and Maes, P., 2012, May. EyeRing: An Eye on a Finger. In CHI Interactivity (Research).

EyeRing: A Finger-worn Assistant

Nanayakkara, S.C., Shilkrot, R. and Maes, P., 2012. EyeRing: a finger-worn assistant. In CHI'12 extended abstracts on human factors in computing systems (pp. 1961-1966).

Effectiveness of the Haptic Chair in Speech Training

Nanayakkara, S.C., Wyse, L. and Taylor, E.A., 2012, October. Effectiveness of the haptic chair in speech training. In Proceedings of the 14th international ACM SIGACCESS conference on Computers and accessibility (pp. 235-236).

Touch and Copy, Touch and Paste

Mistry, P., Nanayakkara, S.C., and Maes, P., 2011. Touch and copy, touch and paste. In CHI'11 Extended Abstracts on Human Factors in Computing Systems (pp. 1095-1098).

The effect of visualizing audio targets in a musical listening and performance task

Wyse, L., Mitani, N. and Nanayakkara, S.C., 2011. The Effect of Visualizing Audio Targets in a Musical Listening and Performance Task. In NIME (pp. 304-307).

SPARSH: Passing Data using the Body as a Medium

Mistry, P., Nanayakkara, S.C. and Maes, P., 2011, March. Sparsh: Passing data using the body as a medium. In Proceedings of the ACM 2011 conference on Computer supported cooperative work (pp. 689-692).

Biases and interaction effects in gestural acquisition of auditory targets using a hand-held device

Wyse, L., Nanayakkara, S.C. and Mitani, N., 2011, November. Biases and interaction effects in gestural acquisition of auditory targets using a hand-held device. In Proceedings of the 23rd Australian Computer-Human Interaction Conference (pp. 315-318).

An enhanced musical experience for the deaf: design and evaluation of a music display and a haptic chair

Nanayakkara, S. C., Taylor, E., Wyse, L. and Ong, S. H. 2009. An enhanced musical experience for the deaf: design and evaluation of a music display and a haptic chair. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '09). Association for Computing Machinery, New York, NY, USA, 337–346.