Lonce Wyse

Associate Professor

Lonce Wyse is an Associate Professor at the Department of Communications and New Media, National University of Singapore. He directs the IDMI Arts and Creativity Lab, which focuses on sound in music and the arts. For his PhD (1994) in Cognitive and Neural Systems from Boston University, he developed a computational model of musical pitch perception. He joined the Institute for Infocomm Research in Singapore in 1994, where he designed and developed interactive sound systems. He is currently with the faculty of Communications and New Media at the National University of Singapore, teaching Sonic Arts and Media Aesthetics and pursuing research in electroacoustic music creation, theory, and psychology.

More about Lonce: www.zwhome.org/~lonce/

Publications

Example-Based Framework for Perceptually Guided Audio Texture Generation

Kamath, P., Gupta, C., Wyse, L., and Nanayakkara, S.C., “Example-Based Framework for Perceptually Guided Audio Texture Generation,” in IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 32, pp. 2555-2565, 2024.

Towards Controllable Audio Texture Morphing

Gupta, C.*, Kamath, P.*, Wei, Y., Li, Z., Nanayakkara, S.C. and Wyse, L. 2023. Towards Controllable Audio Texture Morphing. In 48th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '23), 4-10 June, 2023, Rhodes Island, Greece.

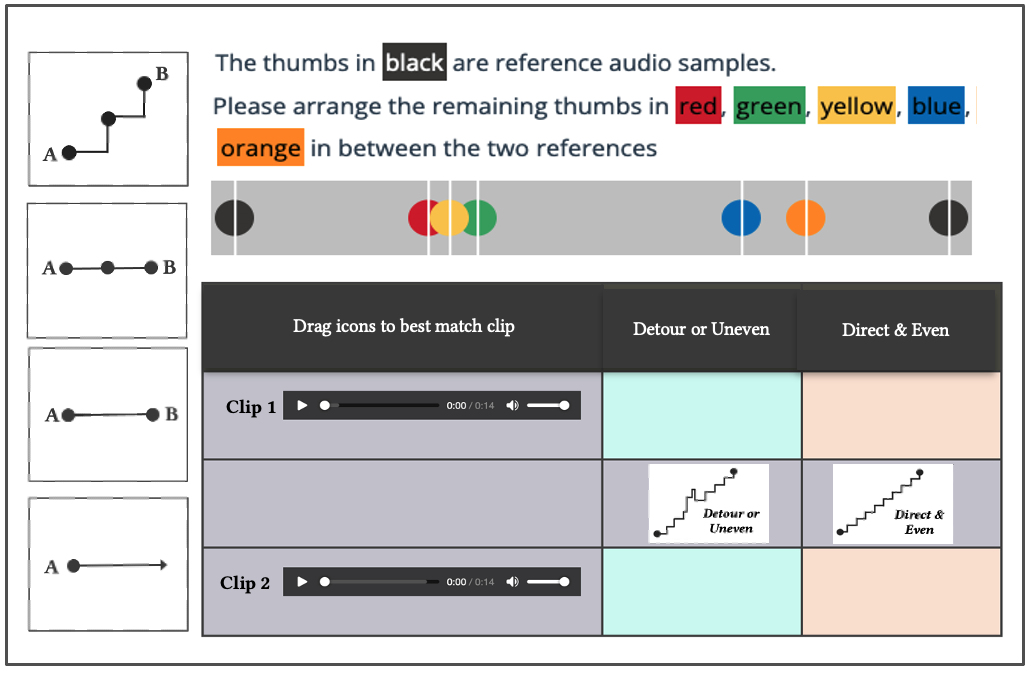

Evaluating Descriptive Quality of AI-Generated Audio Using Image-Schemas

Kamath, P., Li, Z., Gupta, C., Jaidka, K., Nanayakkara, S.C. and Wyse, L. 2023. Evaluating Descriptive Quality of AI-Generated Audio Using Image-Schemas. In 28th International Conference on Intelligent User Interfaces (IUI ’23), March 27–31, 2023, Sydney, NSW, Australia.

SonicSG: From Floating to Sounding Pixels

Nanayakkara, S.C., Schroepfer, T., Wyse, L., Lian, A. and Withana, A., 2017, March. SonicSG: from floating to sounding pixels. In Proceedings of the 8th Augmented Human International Conference (pp. 1-5).

Enhancing Musical Experience for the Hearing-impaired using Visual and Haptic Displays

Nanayakkara S.C., Wyse L., Ong S.H. and Taylor E. “Enhancing Musical Experience for the Hearing-impaired using Visual and Haptic Displays”, Human-Computer Interaction, 28 (2), pp.115-160, 2013.

The Haptic Chair as a Speech Training Aid for the Deaf

Nanayakkara, S.C., Wyse, L. and Taylor, E.A., 2012, November. The haptic chair as a speech training aid for the deaf. In Proceedings of the 24th Australian Computer-Human Interaction Conference (pp. 405-410).

Palm-area sensitivity to vibrotactile stimuli above 1 kHz

Wyse L., Nanayakkara S.C., Seekings, P., Ong S.H. and Taylor E. “Palm-area sensitivity to vibrotactile stimuli above 1 kHz”, NIME’12.

Effectiveness of the Haptic Chair in Speech Training

Nanayakkara, S.C., Wyse, L. and Taylor, E.A., 2012, October. Effectiveness of the haptic chair in speech training. In Proceedings of the 14th international ACM SIGACCESS conference on Computers and accessibility (pp. 235-236).

The effect of visualizing audio targets in a musical listening and performance task

Wyse, L., Mitani, N. and Nanayakkara, S.C., 2011. The Effect of Visualizing Audio Targets in a Musical Listening and Performance Task. In NIME (pp. 304-307).

Biases and interaction effects in gestural acquisition of auditory targets using a hand-held device

Wyse, L., Nanayakkara, S.C. and Mitani, N., 2011, November. Biases and interaction effects in gestural acquisition of auditory targets using a hand-held device. In Proceedings of the 23rd Australian Computer-Human Interaction Conference (pp. 315-318).

An enhanced musical experience for the deaf: design and evaluation of a music display and a haptic chair

Nanayakkara, S. C., Taylor, E., Wyse, L. and Ong, S. H. 2009. An enhanced musical experience for the deaf: design and evaluation of a music display and a haptic chair. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '09). Association for Computing Machinery, New York, NY, USA, 337–346.